38 Limitations of AI for Research

Introduction

If your exposure to AI has primarily been through friends, hype from the general Internet or news, or even teachers actively encouraging you to use it, you may view AI as an obvious go-to resource for anything from writing essays to finding sources to asking for real-life advice. The fact that you can ask questions of a chatbot, which then appears to synthesize information, respond, and edit based on your feedback in a personalized and authoritative-sounding way, makes AI tools a more tempting option than using search engines, the library, individual resources like books or articles, or even asking a real person.

It’s easy. It’s free (for now). It answers your exact question in a confident and “well-written” response – sometimes with links! Why wouldn’t you use it?

Our goal here is not to argue there aren’t uses for AI, even in an educational setting. We leave that determination up to each professor and their goals for their own class. However, there are several essential points we’d like to cover:

- AI is not better than you. Even if you perceive your critical thinking or writing skills to be insufficient, they are automatically better than a machine’s because they are real.

- AI tools have serious limitations that are not obvious, even sometimes to experienced academics. Their outputs are specifically designed to sound confident, accurate, and well-formulated, and yet we have countless examples of false, misleading, or poorly supported AI output. It can be extremely challenging to navigate this reality as a beginner researcher or even just a regular person.

- Deciding whether to use AI for a given task is difficult in a world where (a) technology is changing rapidly, (b) expectations are different in different classes and different workplaces, and (c) AI companies are not up front about their tools’ limitations or ethical decisions that might make you question whether to engage with their product.

Our goal with this chapter is to introduce some of these considerations so you can make well-informed decisions about what is right for you as a person, as a student, as an employee, or in other relevant areas of your life. Keep in mind this content is not comprehensive. We highly recommend further research (somewhere other than an LLM…) on any topics of interest.

AI For Research

A common use for tools like ChatGPT (or even the AI overviews on Google) is finding information. As we covered already, using ChatGPT (or Claude, Perplexity, etc.) is appealing because, unlike using the library search or even a search engine, you can type your question in a way that makes sense to you and get a response that (in theory) combines information across different sources. You don’t have to click into a bunch of resources to piece together an answer, and if you do want to explore further, most of these tools link out to the “sources” they “pulled” information from – things like social media posts, websites, and even academic articles.

So what are the downsides? Turns out, there are a lot.

Hallucinations and Misinformation

The biggest problem with using AI for research (either personal or academic) is hallucinations.

AI hallucinations are AI-generated content that is false or inaccurate. Examples include fake sources, links that lead nowhere, and false facts.

Other forms of misinformation from AI include summaries that misrepresent the sources they are “referencing” and facts taken out of context.

LLMs like ChatGPT or Google’s AI Overview feature are vulnerable to hallucinations because they are prediction machines. Rather than asking what the answer to your question is, they ask what an answer to your question would look like. You get responses that appear helpful at first, but are incorrect (or entirely made up) when you explore further.
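To make the “prediction machine” idea concrete, here is a toy sketch in Python. It is far simpler than a real LLM and is not how any particular product works. The training text, the author names in it, and the function names (`build_model`, `predict_next`, `complete`) are all made up for this illustration. The sketch only counts which word tends to follow which, then strings together the most likely next words.

```python
from collections import Counter, defaultdict

# Tiny made-up training text (hypothetical names, for illustration only).
TRAINING_TEXT = (
    "smith wrote a paper on rocks . "
    "jones wrote a paper on vitamins . "
    "jones wrote a book on vitamins ."
)

def build_model(text):
    """Count which word follows each word in the training text."""
    words = text.split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in model:
        return None
    return model[word].most_common(1)[0][0]

def complete(model, start, max_words=12):
    """Extend `start` one predicted word at a time until a period."""
    output = start.split()
    for _ in range(max_words):
        nxt = predict_next(model, output[-1])
        if nxt is None:
            break
        output.append(nxt)
        if nxt == ".":
            break
    return " ".join(output)

model = build_model(TRAINING_TEXT)
sentence = complete(model, "smith")
print(sentence)  # smith wrote a paper on vitamins .
```

In the made-up training text, Smith only ever wrote about rocks. The toy model still produces “smith wrote a paper on vitamins” because “vitamins” is the most common word after “on.” The sentence looks like the kind of thing the training text says, but nothing checked whether it is true.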

Therefore, you get issues like:

- Incorrect facts

- Claims that are removed or rephrased from their original context, and therefore are entirely misleading

- Completely fake sources and links

These issues occur alongside problems like not accurately identifying key points of a source or even claiming a fact came from one source when it actually came from a different one.

Examples of AI Hallucinations

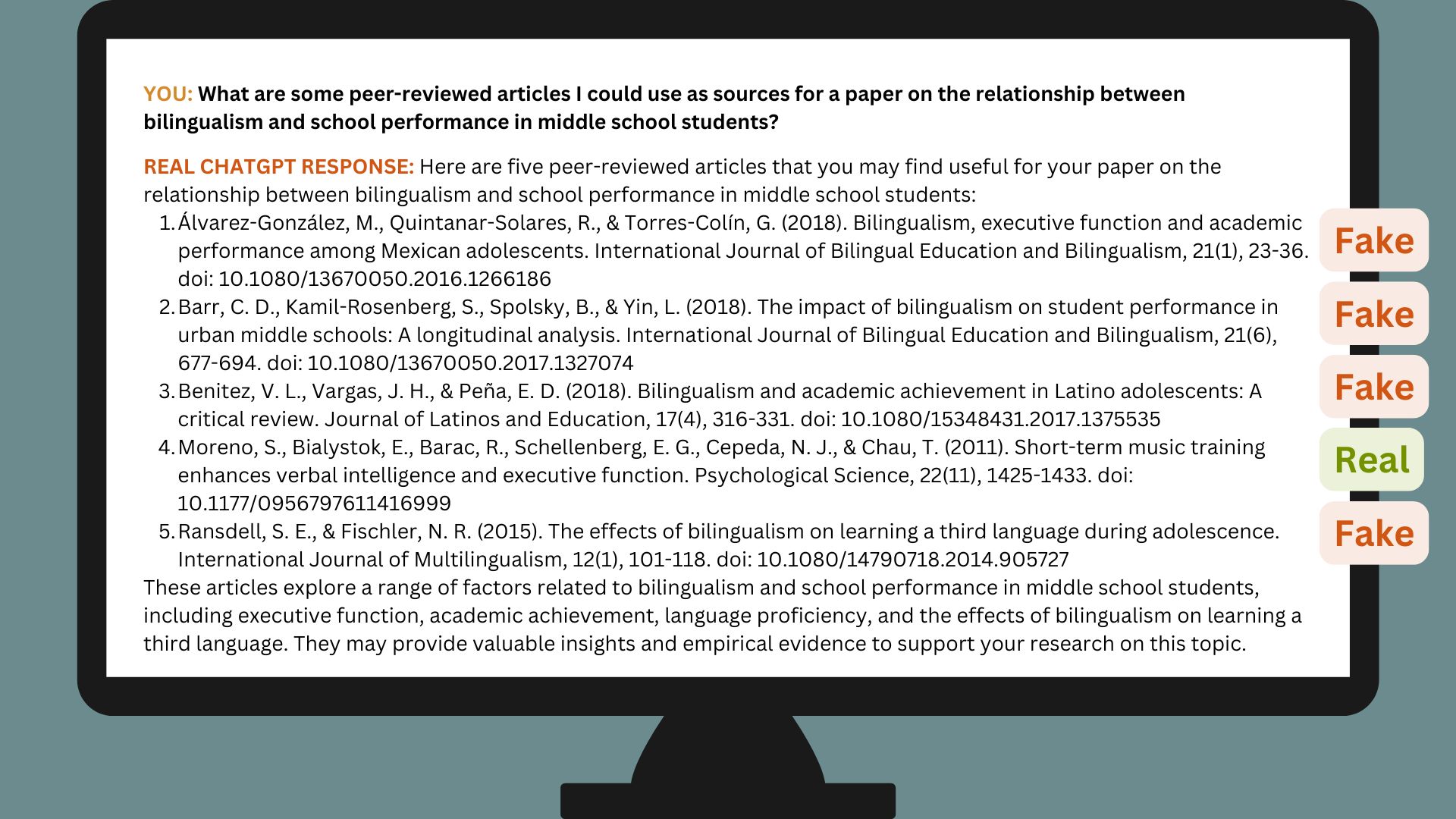

The image above is from a workshop held by UMSL Libraries in the ’23-’24 school year. Though AI models have advanced rapidly since then, hallucinations like those seen in the response are still very common.

Importantly, they would not be obvious to a newer researcher. Hallucinated citations follow a pattern of being unusually well-suited to the proposed topic, sometimes with the names of real authors and article titles that are very similar to actual papers. However, a search to read the full text reveals that the article does not actually exist. The tool may even “hallucinate” a DOI or a link that leads nowhere.

This issue is not limited to academic articles. AI will also generate fake but plausible-sounding news articles, web pages, books, etc.
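For academic articles specifically, one quick check is to paste the DOI after `https://doi.org/` in your browser and see whether it leads to a real article. The short Python sketch below shows why the look of a DOI proves nothing. The helper names (`looks_like_doi`, `resolver_url`) and the DOI itself are invented for this example, and the pattern is only a rough approximation of what most modern DOIs look like.

```python
import re

# Rough shape of most modern DOIs: "10.", a 4-9 digit prefix, "/", a suffix.
# This is an approximation, not an official definition.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")

def looks_like_doi(text):
    """Check only the FORMAT of a DOI, not whether it exists."""
    return bool(DOI_PATTERN.match(text.strip()))

def resolver_url(doi):
    """Build the doi.org address to open in a browser for a real check."""
    return "https://doi.org/" + doi.strip()

# Hypothetical DOI invented for this example; it is not a real article.
candidate = "10.1234/fake.2024.001"

print(looks_like_doi(candidate))  # True, even though the DOI is invented
print(resolver_url(candidate))
```

The invented DOI passes the format check, which is exactly the problem: a hallucinated citation can look perfectly valid. The only reliable test is to follow the link and confirm that the article exists and matches the title and authors you were given.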

It’s also important to remember that AI does not “understand” or “read” the sources it cites. This limitation can result in misleading claims and a lack of important context.

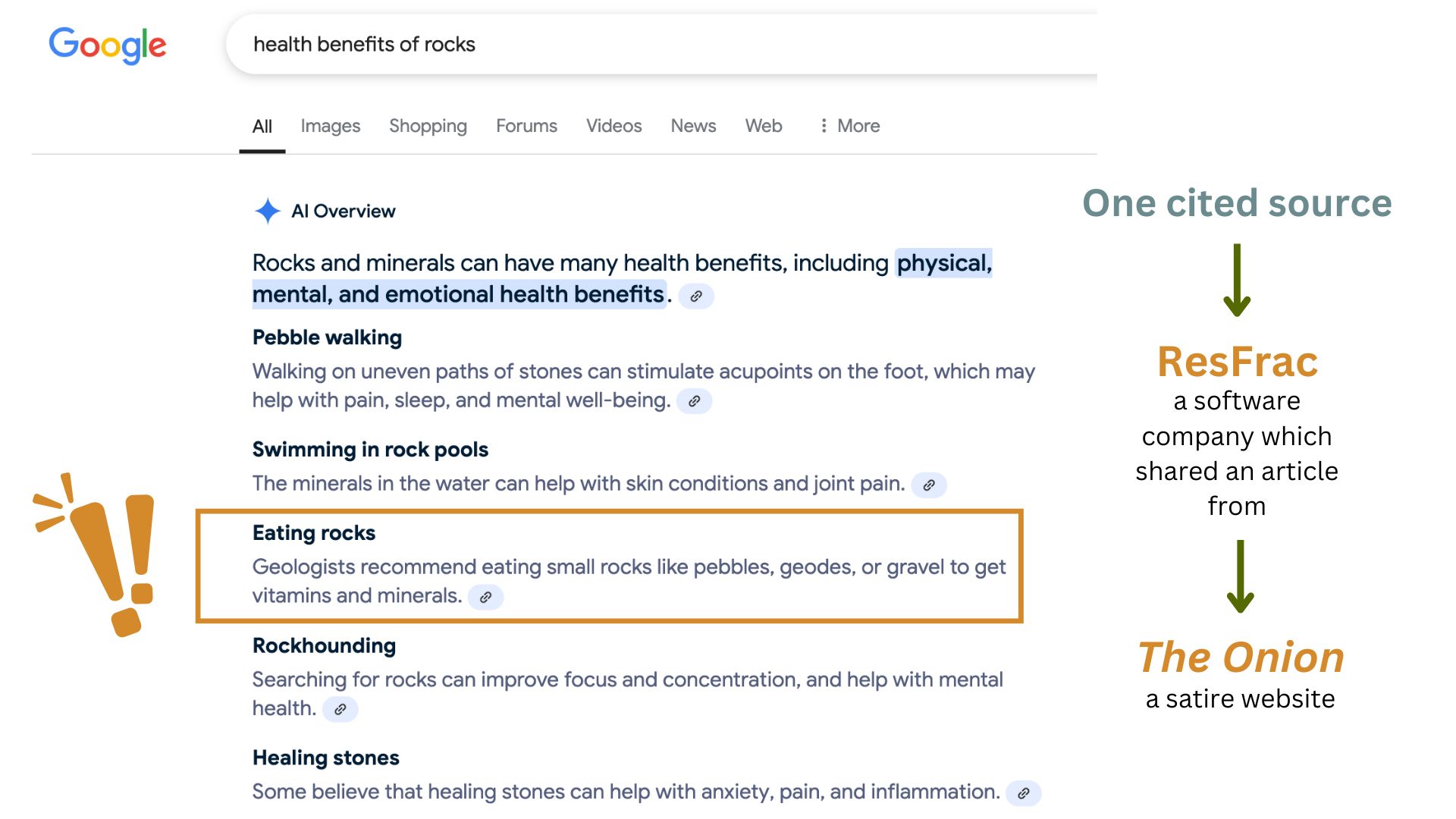

In the Google screenshot above, the AI Overview claims that geologists recommend eating rocks to get valuable vitamins and minerals. However, by exploring the sources cited by the AI tool, we find an article from ResFrac, a software company, thanking The Onion for using a picture of a geophysicist who advises their company. Importantly, The Onion is a satire site (the original article was titled “Geologists Recommend Eating At Least One Small Rock Per Day”). Google’s AI considered ResFrac to be a reputable site, but could not pick up on the context of an amusing reference back to a satire article. It would probably be worth second-guessing some of the other claims as well.

Source Type Limitations and Accessibility

LLMs are improving in their ability to link to real sources. Some tools (e.g., Perplexity) are even designed for online research and allow you to focus your efforts on particular types of sources. However, most of these tools are still insufficient to rely on for college-level research for multiple reasons:

- Most of these tools are bad at identifying the type of source they are referencing. As a new researcher, you may struggle with distinguishing source types. Your generic AI tool is worse at it.

- Links are often limited to materials that are available open access online. We don’t mean to suggest that if something is available for free online, that means it’s inappropriate as a source for academic research. However, you would also be missing a TON of helpful research on your topic that you could be finding by using a library search tool. This content includes:

  - Materials that are digital, but locked behind a paywall so you can’t actually read them. Just reading a summary or abstract is not sufficient for college-level research. Visit your library for access to paywalled articles.

  - Print materials. Plenty of helpful and up-to-date content is still published and purchased in print. Tools like ChatGPT will typically not reference these sources, nor will their content be included in the training material used to generate the tools’ answers (the same is true for a lot of paywalled content).

Bias

AI can generate biased content as a result of its training data. LLMs like ChatGPT train on human-created texts, many of which reflect stereotypes and misinformation about various groups of people. Other types of AI that rely on non-text-based data for tasks such as categorizing, recommending, and otherwise making decisions can also be impacted by unrepresentative training materials.

Finally, the decisions that people make using AI-generated content are also vulnerable to bias (as are most human thoughts and actions), especially if the people using these tools assume they are infallible and “neutral.”

The video below provides a short overview of this issue (run time: 8:37):

AI Misinformation and Research: Real Consequences

As a student, it may be understandably difficult to grasp why professors and others are so averse to relying on AI for in-depth research. However, it’s important to remember that the information gathering, source evaluation, and comprehension skills you develop throughout your college classes are meant to prepare you for navigating the real world.

Our examples of AI hallucinations so far have been, technically, accidental. Untrue facts are the result of limitations of the AI model, not someone maliciously creating fake content. However, it’s important to keep in mind that both unintended and intentional misinformation have the potential to have real negative consequences.

We have reached an era where some research articles, websites, and even government reports (that influence real policy and public opinion) have included fake citations (note: the goal of this example is not to critique a specific political party, but to illustrate the natural questioning of credibility that results from work built on sources that don’t exist). Think about how you feel as someone whose health, finances, relationships, voting patterns, and other real and important decisions could be impacted negatively because someone in a position of responsibility inappropriately relied on AI rather than real research.

Eventually, you will be the person with responsibility. We encourage you to learn the source gathering and fact-checking skills you need to bear that responsibility with integrity.

These skills will also serve you well as we face a world where bad actors intentionally use generative AI to create fake content, either as a prank or for more malicious goals (like influencing elections, scamming people, etc.). Watch the video below for a short discussion of this problem (run time: 13:02):

Key Takeaways

- Most generic AI tools like ChatGPT are not well-suited for research, either academic or personal.

- Significant research-related limitations of popular AI tools include frequent hallucinations, limitations in available sources, and possible bias.

- Relying on tools that can either intentionally or accidentally generate fake content can have real-world consequences. It is important to be aware of these limitations so you can manage your personal, academic, and work responsibilities with integrity.