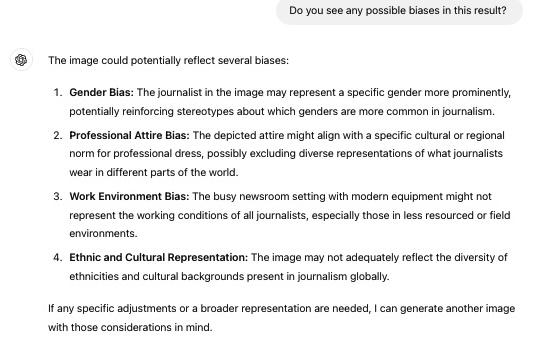

Prompts and Biases

Tool: ChatGPT (GPT-4o)

Example output from the prompt “Please analyze your response for biases.” The original prompt was “Create an image of a working journalist.” Notice that item #3 in the analysis is specific to journalism.

We usually think of bias in terms of skin color, gender, ethnicity, and the other major categories into which we divide society, but biases can be much more subtle. If we want to write prompts that reflect our intentions, we need to think beyond those categories.

Dealing with Bias

- Use neutral language. To avoid gender stereotyping, avoid gendered pronouns, and use inclusive terms such as “healthcare professional” rather than “doctor” or “nurse,” unless you specifically want to focus on one or the other.

- On the other hand, if the AI produces content that is too homogeneous, be more specific. For instance, if you want an image to depict people of different ages, including some with disabilities, say so explicitly. Past a certain point, adding detail yields diminishing returns; experience will show you where that point lies.

- If the output misses the mark, ask the AI to revise it in the direction you want: for example, to be more detailed or to focus on treatment rather than symptoms.

- If you already have something that models the tone you want, upload it with or before your prompt and ask the AI to emulate that tone.

Preparation

If an AI gives you a reply (text, image, etc.) that contains biases that are either ethically objectionable or that skew the results in an unintended direction (e.g., worldwide marketing materials that come out skewed toward North America), consider asking the AI to analyze its reply for biases. Then select the specific biases you want addressed and ask it to correct them. This is an iterative process.

Ingredients

- A problematic response from an AI.

- A request to analyze the response for biases.

- Choose items from the analysis and ask the AI to correct them.

- Repeat as necessary.

Try this prompt in the AI app of your choice:

AI Response for Possible Biases

Review the AI's response for possible biases that might be objectionable or that might make the results less applicable in the current context.

- Ask, “Please analyze your response for biases.”

- Review the analysis and decide whether it has identified the problems. If not, follow up with guiding questions. If it has identified the problems, or found additional ones, ask it to regenerate its original response with those biases taken into account.

- Repeat as needed.